Top 3 Fine-Tuned T5 Transformer Models

In this article, I'll discuss my top three favourite fine-tuned T5 models that are available on Hugging Face's Model Hub. T5 was published by Google in 2019 and has remained one of the leading text-to-text models in NLP. It is among the few models that outperform the human baseline on the General Language Understanding Evaluation (GLUE) benchmark.

Google has been kind enough to release the weights for T5, which can be accessed through Hugging Face's Model Hub. So, anyone can download and fine-tune their own version of T5 and then release their trained model to the world for others to use. In this article, we'll discuss three of my favourite fine-tuned T5 models that you can use right now in just a few lines of code.

We'll use my very own Happy Transformer library. Happy Transformer is built on top of Hugging Face's Transformers library and makes it easy to implement and train Transformer models with just a few lines of code. If you're interested in learning more about fine-tuning, you can check out this tutorial that explains how to fine-tune a grammar correction model.

Happy Transformer

Happy Transformer is available on PyPI and thus can be installed with a simple pip command.

pip install happytransformer

T5 is a text-to-text model, so we need to import HappyTextToText, the Happy Transformer class for implementing text-to-text models.

from happytransformer import HappyTextToText

Vamsi/T5_Paraphrase_Paws

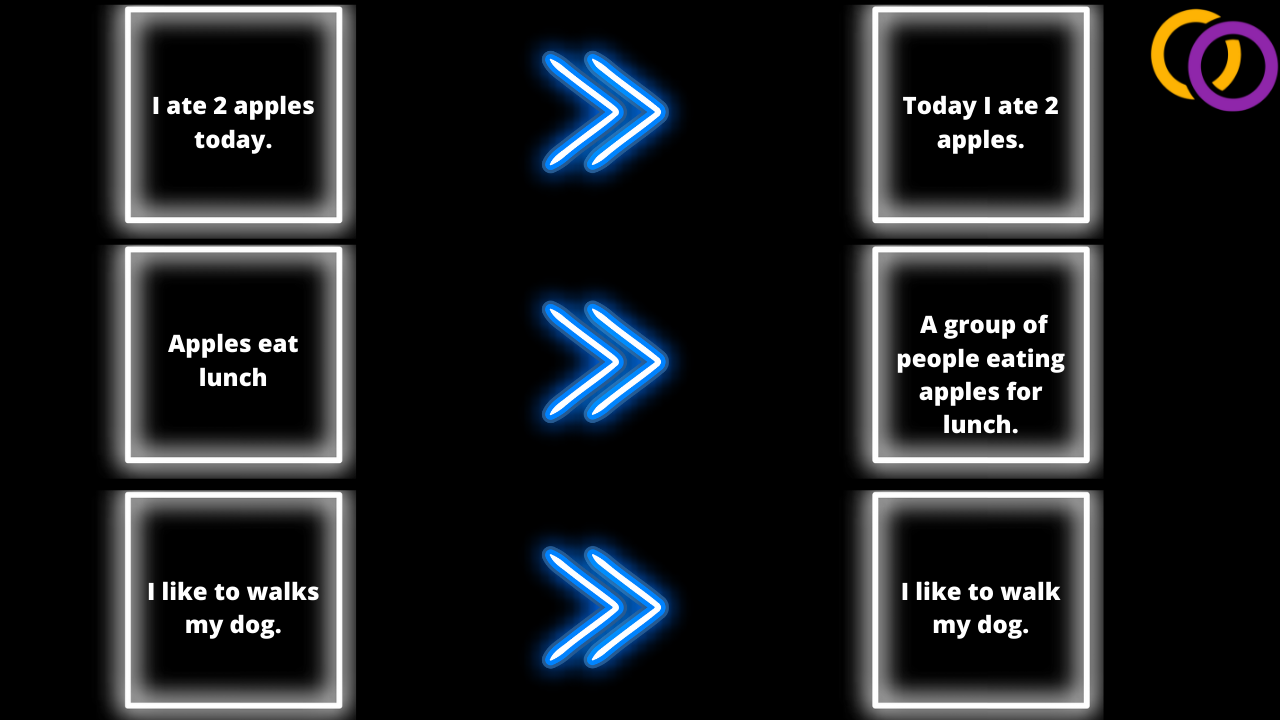

The first model we'll discuss paraphrases text. Given a text input, the model rewrites it with different words while retaining the same meaning. The model was trained with Google's PAWS dataset and has been downloaded over 78 thousand times.

We'll start by downloading and instantiating the model by creating a HappyTextToText object as shown below. The first positional parameter is the model type, which is simply "T5", while the second parameter is the model name, which is "Vamsi/T5_Paraphrase_Paws".

happy_paraphrase = HappyTextToText("T5", "Vamsi/T5_Paraphrase_Paws")

We can use different text generation settings, which will result in different outputs. We will not focus on text generation settings in this article and will instead use the settings recommended by the author of the model.

from happytransformer import TTSettings

top_k_sampling_args = TTSettings(do_sample=True, top_k=120, top_p=0.95, early_stopping=True, min_length=1, max_length=30)

From here, we can begin generating text using happy_paraphrase's "generate_text" method. We must provide the text we wish to paraphrase as the first positional parameter and the settings to the parameter called "args". We also need to add the text "paraphrase: " to the beginning of the input to specify which task the model should complete, and the text " </s>" to the end.

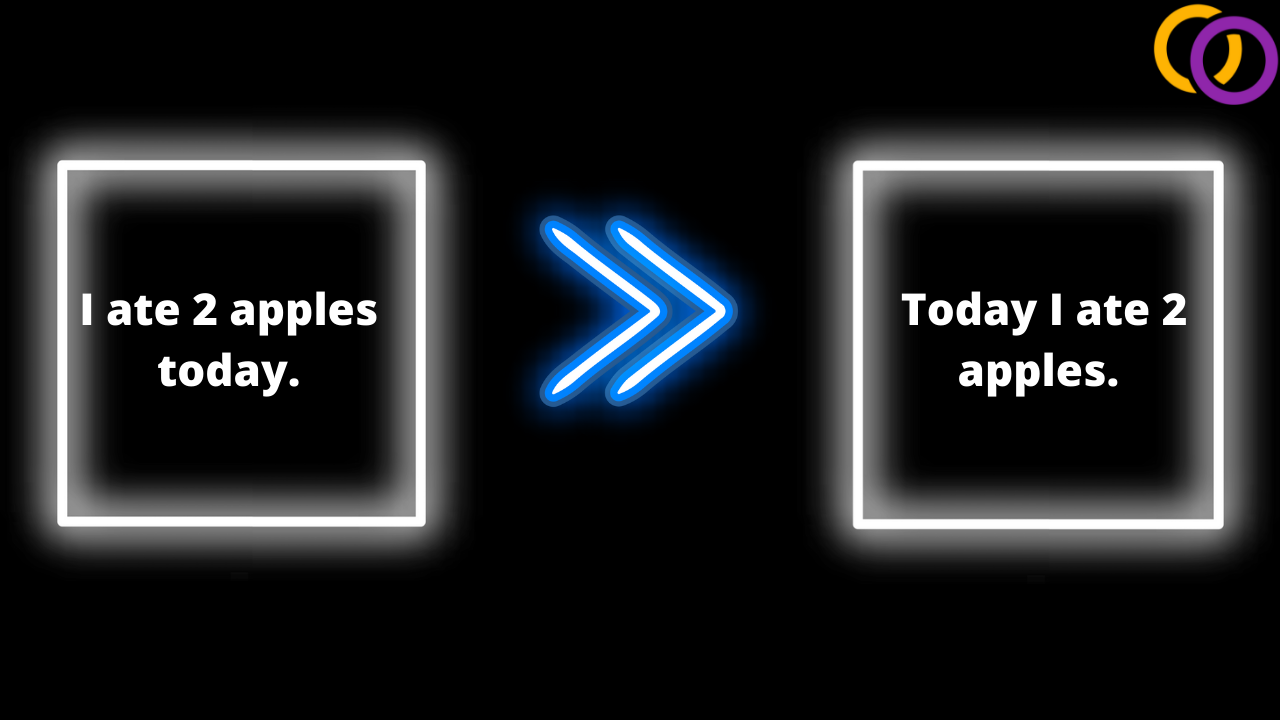

text = "I ate 2 apples today."

input_text = "paraphrase: " + text + " </s>"

result = happy_paraphrase.generate_text(input_text, args=top_k_sampling_args)

The result is a dataclass object with a single variable called text, which we can isolate as shown below.

print(result.text)

Result: Today I ate 2 apples.
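
Because the settings above sample tokens (do_sample=True), repeated calls can return different paraphrases. Here's a minimal sketch of how you might collect a few distinct ones. The helper name collect_paraphrases and the canned outputs are my own assumptions for illustration, and the generator is passed in as a plain function so the sketch runs without downloading a model.

```python
def collect_paraphrases(text, generate, attempts=5):
    """Call `generate` several times and return the distinct paraphrases.

    `generate` is any function that maps a prompt string to an output string.
    """
    # Same prompt format used in the article: task prefix plus " </s>" suffix.
    prompt = "paraphrase: " + text + " </s>"
    seen = []
    for _ in range(attempts):
        candidate = generate(prompt).strip()
        # Skip echoes of the input and anything already collected.
        if candidate != text and candidate not in seen:
            seen.append(candidate)
    return seen


# Stand-in generator with canned (made-up) outputs, used only for the demo.
_canned = iter([
    "Today I ate 2 apples.",
    "I ate 2 apples today.",
    "Today I ate 2 apples.",
    "I had two apples today.",
])
demo = collect_paraphrases(
    "I ate 2 apples today.", lambda prompt: next(_canned), attempts=4
)
print(demo)
```

To try this with the real model, you could pass something like lambda p: happy_paraphrase.generate_text(p, args=top_k_sampling_args).text as the generate argument.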

mrm8488/t5-base-finetuned-common_gen

The next model we'll discuss takes in a set of words and then produces text based on the words provided. So, perhaps you have a few keywords for text you wish to produce, then you can use this model to generate text relating to those keywords.

happy_common_gen = HappyTextToText("T5", "mrm8488/t5-base-finetuned-common_gen")

We'll use an algorithm called beam search to generate text. Remember to first import TTSettings if you haven't already.

beam_args = TTSettings(num_beams=5, min_length=1, max_length=100)

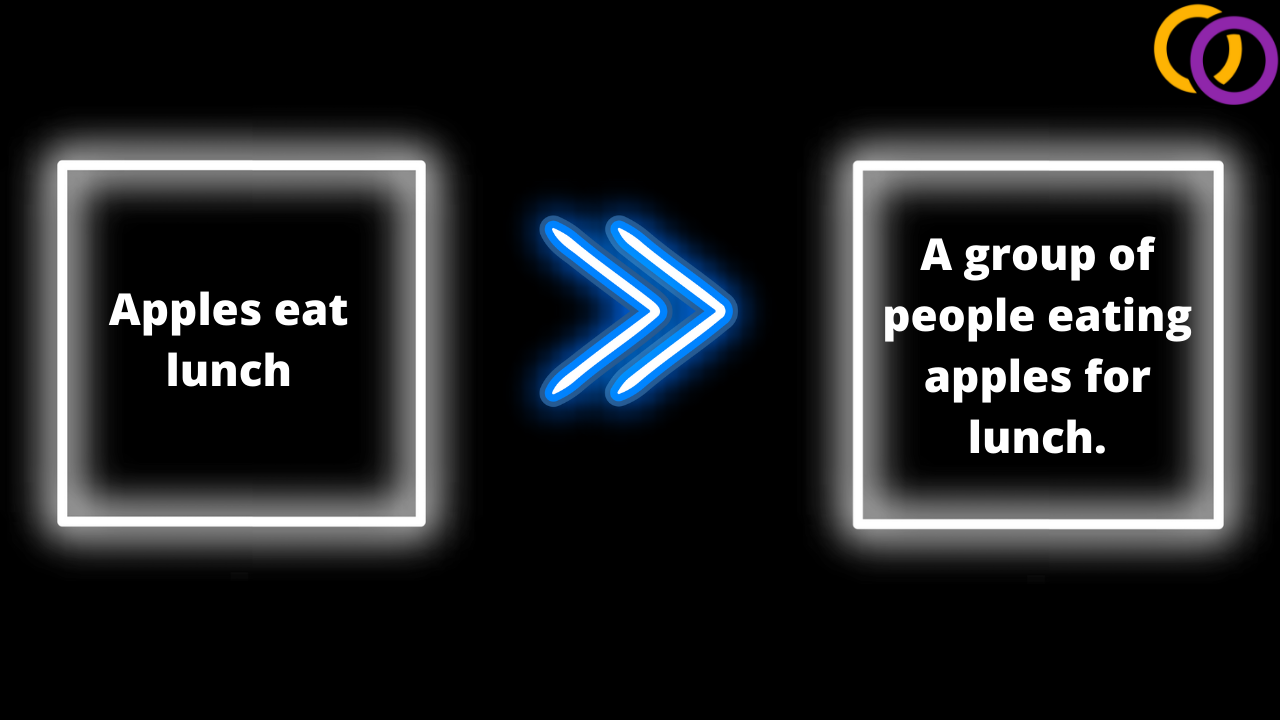

input_text = "Apples eat lunch"

result = happy_common_gen.generate_text(input_text, args=beam_args)

print(result.text)

Result: A group of people eating apples for lunch.
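
If you're curious what beam search does, here's a toy sketch of the idea. It is not the implementation used by Happy Transformer or Hugging Face, which adds details such as length penalties. The tiny TOY_MODEL table and its probabilities are made up for illustration. At each step, the algorithm keeps only the num_beams highest-scoring partial sequences.

```python
import math

# Toy next-token model (an assumption for illustration): maps the last token
# to a probability distribution over the next token.
TOY_MODEL = {
    "<s>": {"a": 0.6, "the": 0.4},
    "a": {"dog": 0.3, "cat": 0.3, "</s>": 0.4},
    "the": {"dog": 0.9, "</s>": 0.1},
    "dog": {"</s>": 1.0},
    "cat": {"</s>": 1.0},
}


def beam_search(model, num_beams=2, max_length=5):
    """Return the highest-scoring token sequence found with beam search."""
    # Each beam is (cumulative log-probability, list of tokens).
    beams = [(0.0, ["<s>"])]
    for _ in range(max_length):
        candidates = []
        for score, tokens in beams:
            if tokens[-1] == "</s>":
                # Finished sequences are carried forward unchanged.
                candidates.append((score, tokens))
                continue
            for token, prob in model[tokens[-1]].items():
                candidates.append((score + math.log(prob), tokens + [token]))
        # Keep only the num_beams best candidates.
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:num_beams]
        if all(tokens[-1] == "</s>" for _, tokens in beams):
            break
    return beams[0][1]


greedy = beam_search(TOY_MODEL, num_beams=1)  # always takes the single best next token
wide = beam_search(TOY_MODEL, num_beams=2)    # keeps two hypotheses alive per step
print(greedy)
print(wide)
```

With one beam, the search commits to "a" because it is the most likely first token, and ends up with a sequence of probability 0.24. With two beams, it keeps "the" alive as well and finds "the dog", which has a higher overall probability of 0.36.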

vennify/t5-base-grammar-correction

Grammar correction is a common task in NLP. Perhaps you want to provide recommendations to your users to improve their writing, or maybe you want to improve the quality of your training data. In either case, you can use the model we're about to describe.

This model was created by me and was trained using Happy Transformer. You can read a full article on how to train a similar model, and another article on how to publish the model to Hugging Face's Model Hub.

happy_grammar = HappyTextToText("T5", "vennify/t5-base-grammar-correction")

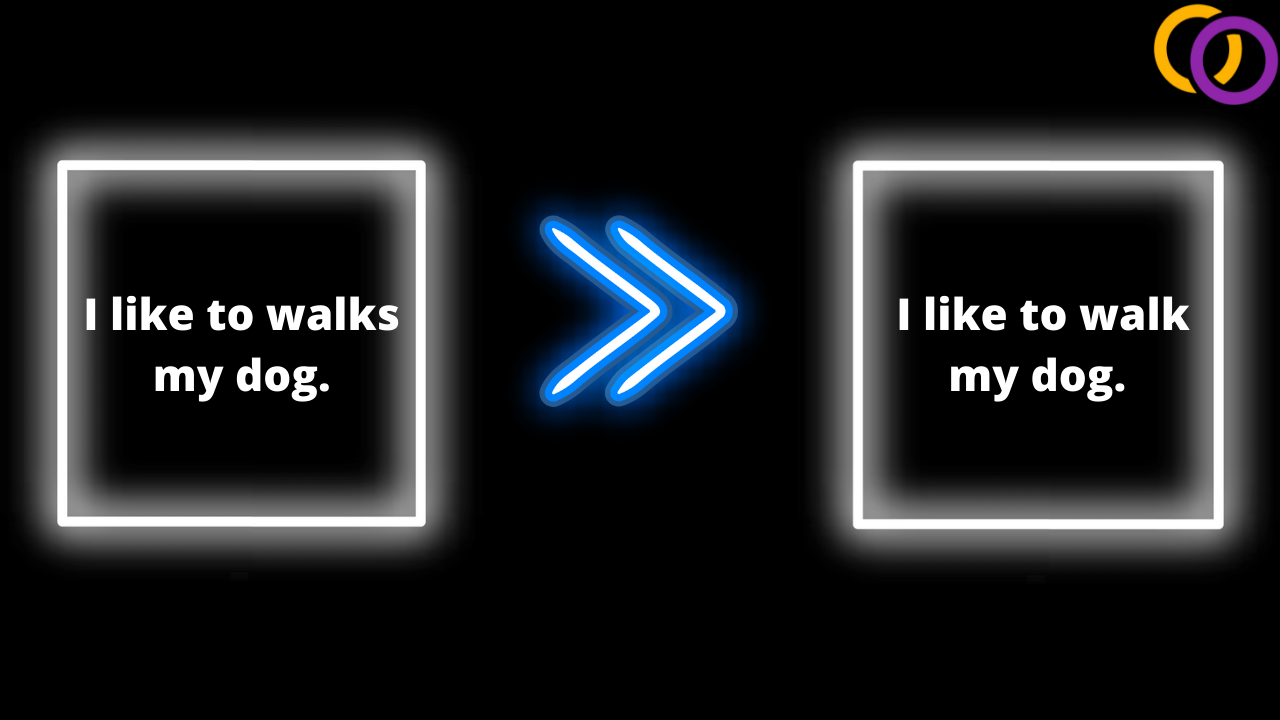

text = "I like to walks my dog."

input_text = "grammar: " + text

result = happy_grammar.generate_text(input_text, args=beam_args)

print(result.text)

Result: I like to walk my dog.
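
If you want to give users recommendations, you probably only care about the sentences the model actually changed. Here's a minimal sketch of that idea. The helper name suggest_corrections and the stand-in fake_correct function are my own assumptions for illustration, and the corrector is passed in as a plain function so the sketch runs without downloading the model.

```python
def suggest_corrections(sentences, correct):
    """Return (original, corrected) pairs for sentences the model changed.

    `correct` is any function that maps a prompt string to an output string.
    """
    suggestions = []
    for sentence in sentences:
        # Same prompt format used in the article: the "grammar: " task prefix.
        corrected = correct("grammar: " + sentence).strip()
        if corrected != sentence:
            suggestions.append((sentence, corrected))
    return suggestions


# Stand-in corrector for the demo: fixes one known sentence and echoes the rest.
_fixes = {"grammar: I like to walks my dog.": "I like to walk my dog."}


def fake_correct(prompt):
    return _fixes.get(prompt, prompt[len("grammar: "):])


pairs = suggest_corrections(
    ["I like to walks my dog.", "I like to walk my dog."], fake_correct
)
print(pairs)
```

To try this with the real model, you could pass something like lambda p: happy_grammar.generate_text(p, args=beam_args).text as the correct argument.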

Conclusion

And there we go, we just discussed my top three favourite T5 models. Now, I encourage you to fine-tune and release your own Transformer models to the world. This way, you'll truly be helping the NLP community while enhancing your skills. Once again, here's a tutorial on how to fine-tune a grammar correction model, which may inspire you to release your own similar model. Maybe one day I'll write an article on one of your models. Stay happy, everyone!

Code

Here's a Google Colab that contains all of the code discussed in this article.

Course

If you enjoyed this article, then chances are you would enjoy one of my latest courses, which covers how to create a web app, written in 100% Python, that displays a Transformer model called GPT-Neo. Here's the course with a coupon attached.

Discord

Join the Happy Transformer Discord server to meet like-minded NLP enthusiasts.

YouTube

Check out my YouTube channel for more content on NLP.

Happy Transformer

Support Happy Transformer by giving it a star 🌟🌟🌟

Book a Call

We may be able to help you or your company with your next NLP project. Feel free to book a free 15-minute call with us.