Self-Talk: Obtain Knowledge From Text Generation Transformer Models

Text generation Transformer models are truly impressive. They first caught the public eye when OpenAI deemed one of its models, GPT-2, too dangerous to release. OpenAI eventually released the model, including its largest version, which you can now use with just a few lines of code. Since then, these models have grown considerably in both size and capability. Now, OpenAI's latest model, called GPT-3, can perform basic arithmetic and generate realistic news articles.

This article will focus on one of the latest applications of text generation Transformer models: knowledge generation. From a high level, these models are quite simple; they attempt to continue the text you provide them. Now, what if you provide the model with a question? Well, the model will continue the text and, as a result, typically attempt to answer the question. In doing so, it leverages the knowledge it learned during training to produce information. By the end of the article, you will know how to implement state-of-the-art AI models to perform this task with a minimal amount of Python code.

Discovery

The Allen Institute for AI (AI2) was the first to discover this application of Transformer models and named it self-talk [1]. My undergraduate capstone project team independently discovered a very similar method before learning about their paper. So, I have insights to share from both my capstone project and the paper by AI2.

Basic Implementation

In this tutorial, we'll implement a slightly simplified version of self-talk. We'll use my very own Happy Transformer Python package, which is a wrapper on top of Hugging Face's Transformers library. Happy Transformer allows you to implement and train Transformer models with just a few lines of code – including text generation models, which we'll use for this tutorial.

Installation

First off, Happy Transformer is available on PyPI, and thus we can pip install it with one line of code.

pip install happytransformer

Download Model

Now, we're going to import a class called HappyGeneration from Happy Transformer to download a text generation model.

from happytransformer import HappyGeneration

From here, we can load GPT-Neo, a fully open-source model that is modeled on GPT-3. There are currently three GPT-Neo models of different sizes available on Hugging Face's model distribution network. We'll use the second largest (or second smallest if you're feeling pessimistic) model, which has 1.3B parameters. Here's a link to the other models if you wish to use them instead.

To create a HappyGeneration object, we must provide the model type as its first positional parameter and the model name as its second. In this case, the model type is "GPT-NEO" and the model name is "EleutherAI/gpt-neo-1.3B" as shown in the top left of the model's webpage.

happy_gen = HappyGeneration("GPT-NEO", "EleutherAI/gpt-neo-1.3B")

Text Generation Algorithm

There are different text generation algorithms you may use to produce text. Now, the purpose of this article is not to give an in-depth explanation of different text generation algorithms, but instead to describe how to implement self-talk. Of four different text generation algorithms my team tested, we found that the "beam search" algorithm was the most effective. This algorithm is deterministic, meaning each time you run it, the same result is reached.
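To give a feel for why beam search is deterministic, here is a minimal sketch of the idea on a toy example. The `TOY_MODEL` table and the `beam_search` function are hypothetical and written only for illustration; they are not how Happy Transformer or Hugging Face implement the algorithm, which also handles details such as length penalties and n-gram blocking.

```python
import math

# Hypothetical toy "language model": maps the last token to next-token probabilities.
TOY_MODEL = {
    "<s>": {"a": 0.6, "the": 0.4},
    "a": {"dog": 0.55, "cat": 0.45},
    "the": {"dog": 0.9, "cat": 0.1},
    "dog": {"</s>": 1.0},
    "cat": {"</s>": 1.0},
}


def beam_search(num_beams, max_steps):
    """Return the highest-scoring token sequence found by beam search."""
    beams = [(0.0, ["<s>"])]  # each beam is (log-probability, tokens)
    for _ in range(max_steps):
        candidates = []
        for score, tokens in beams:
            if tokens[-1] == "</s>":
                candidates.append((score, tokens))  # finished beams carry over
                continue
            for token, prob in TOY_MODEL[tokens[-1]].items():
                candidates.append((score + math.log(prob), tokens + [token]))
        # Keep only the num_beams most likely partial sequences.
        candidates.sort(key=lambda candidate: candidate[0], reverse=True)
        beams = candidates[:num_beams]
    return beams[0][1]


print(beam_search(num_beams=1, max_steps=3))  # one beam is the same as greedy decoding
print(beam_search(num_beams=2, max_steps=3))
```

With a single beam, the search commits to "a" because it is the most likely first token. With two beams, it also keeps "the" alive and ends up with a more likely sequence overall. No randomness is involved, so repeated runs give the same output.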

To modify the settings, we must import a class called GENSettings. Then, we can modify various parameters to select and modify the algorithm we wish to use.

from happytransformer import GENSettings

beam_settings = GENSettings(num_beams=5, max_length=50, no_repeat_ngram_size=3)

AI2 used an algorithm called top-p (nucleus) sampling with a p value of 0.5 to generate answers. Unlike beam search, this algorithm is non-deterministic, meaning each time you run it, it produces different text.

top_p_settings = GENSettings(do_sample=True, top_k=0, top_p=0.5, max_length=50)
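For intuition, top-p sampling can be sketched in a few lines of plain Python. The `nucleus` and `sample_top_p` functions and the probability table below are hypothetical and only illustrate the idea for a single next-token choice; the real implementation inside the Transformers library works on model logits.

```python
import random


def nucleus(probs, top_p):
    """Keep the most likely tokens until their total probability reaches top_p,
    then renormalize so the kept probabilities sum to 1."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept, total = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        total += prob
        if total >= top_p:
            break
    return {token: prob / total for token, prob in kept}


def sample_top_p(probs, top_p, rng):
    """Randomly pick one token from the nucleus."""
    filtered = nucleus(probs, top_p)
    return rng.choices(list(filtered), weights=list(filtered.values()), k=1)[0]


# Made-up next-token probabilities for illustration only.
next_token_probs = {"domesticated": 0.4, "member": 0.3, "mammal": 0.2, "robot": 0.1}

print(nucleus(next_token_probs, top_p=0.5))
print(sample_top_p(next_token_probs, top_p=0.5, rng=random.Random()))
```

A lower p value shrinks the set of candidate tokens, which makes the output more focused and less varied. The sampling step is what makes the algorithm non-deterministic.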

Obtain Knowledge

We can now begin generating knowledge! Let's create a question and then use the text generation model to answer it. To accomplish this, we will call happy_gen's generate_text() method.

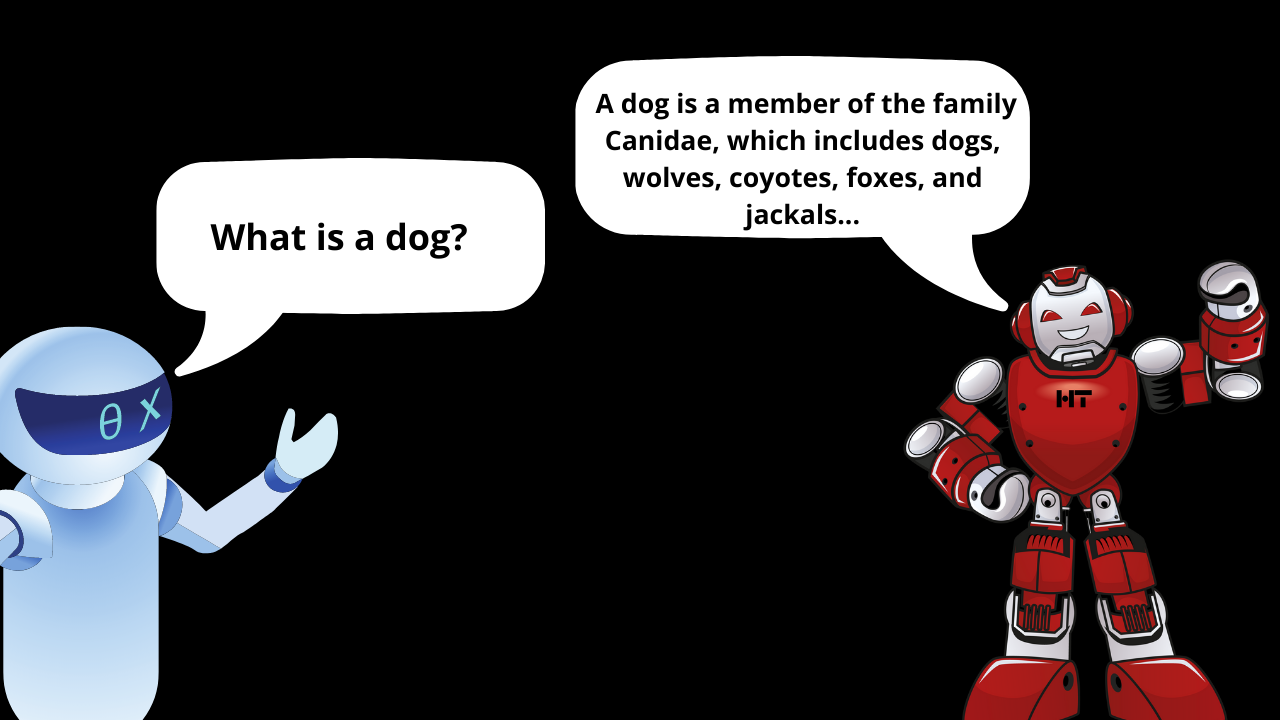

question = "What is a dog?"

result = happy_gen.generate_text(question, args=beam_settings)

print(result.text)

Result: A dog is a member of the family Canidae, which includes dogs, wolves, coyotes, foxes, and jackals. Dogs have been domesticated for more than 10,000 years. They were first domesticated

Not bad! Now, if you were to use the top-p settings instead, you would likely get a different result each time you performed inference. Here's an example of using the top-p algorithm instead.

result = happy_gen.generate_text(question, args=top_p_settings)

print(result.text)

Result: A dog is a domesticated animal that has been bred to be a companion, usually for the purpose of hunting, guarding, or working. Dogs are often called "pets" or "companions" because they are often raised for the

Include Context

I suggest you add context to your input if possible. For example, imagine you ask the model to define a word that happens to be a homonym. The model may then produce a definition for one of the word's meanings that does not apply to your context. Below are two examples of generating knowledge with and without adding context before the question.

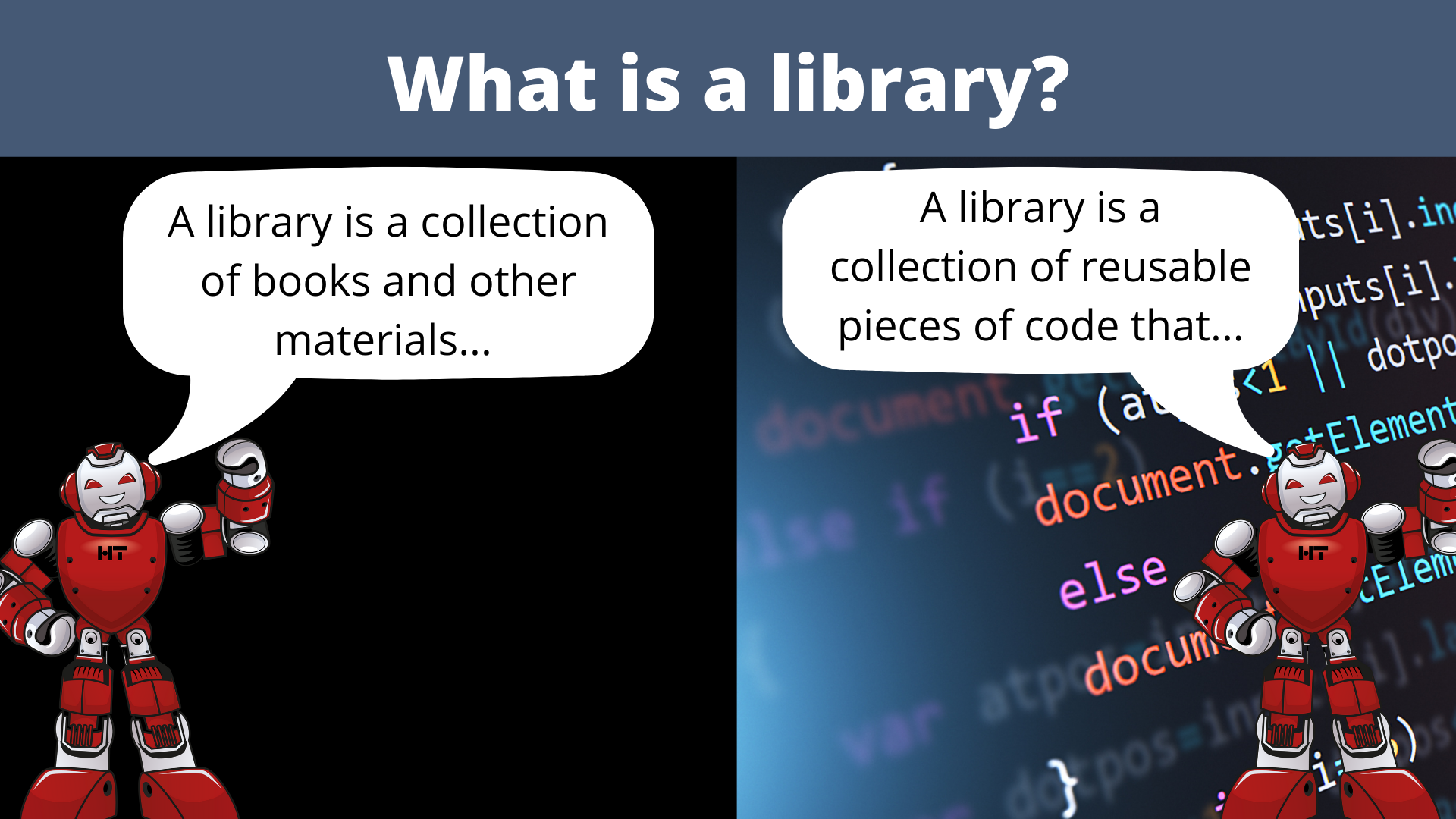

input_no_context = "What is a library?"

result_no_context = happy_gen.generate_text(input_no_context, args=beam_settings)

print(result_no_context.text)

Result: A library is a collection of books and other materials that can be used for a variety of purposes, such as research, teaching, or entertainment. What is the difference between a library and a book store? A book store is a

input_with_context = "Happy Transformer is an open-source Python package. What is a library?"

result_with_context = happy_gen.generate_text(input_with_context, args=beam_settings)

print(result_with_context.text)

Result: A library is a collection of reusable pieces of code that can be used together to solve a common problem. For example, a library could be a set of functions that are used together in a program to perform a specific task.

As you see, by providing context, we helped the model narrow down what we meant by "library." So, I recommend you add context to your input when performing self-talk. Now, let's discuss how to generate questions automatically.

Automatically Generate Questions

Imagine you're given a piece of text, and you wish to generate additional background knowledge for it automatically through asking a language model questions. You'll have to understand the underlying text to craft quality questions that are both grammatically correct and relevant. A text generation Transformer model can be applied to solve this problem.

My capstone project team used named entity recognition to identify nouns within the context and then created a question for each one. For example, if the word "banana" was within the text, then the question "What is a banana?" would be generated. We found this method to be effective, but the team at AI2 proposed a more sophisticated approach that generates questions using a text generation model.
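The question-per-noun idea can be sketched as follows. This is a simplified illustration rather than my team's actual code: the hypothetical `questions_for_nouns` function assumes the nouns have already been extracted by some tagger, and it picks "a" or "an" with a naive first-letter rule.

```python
def questions_for_nouns(nouns):
    """Create one "What is a/an X?" question per noun, skipping duplicates."""
    questions = []
    seen = set()
    for noun in nouns:
        key = noun.strip().lower()
        if not key or key in seen:
            continue
        seen.add(key)
        # Naive article choice; words like "hour" or "unicorn" will be wrong.
        article = "an" if key[0] in "aeiou" else "a"
        questions.append(f"What is {article} {key}?")
    return questions


print(questions_for_nouns(["banana", "Banana", "apple"]))
```

Each generated question can then be passed to the text generation model in the same way as the "What is a dog?" example earlier.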

We'll discuss how to use the method proposed by AI2 to generate questions. They crafted prompts and then used a text generation model to continue the prompts to produce questions. For example, they applied self-talk to the Winograd Schema Challenge, which, briefly, involves predicting which noun an ambiguous pronoun refers to. So, given the sentence "The hammer did not fit into the toolbox because it is too big," the model must determine whether "it" refers to "the hammer" or "the toolbox." Below is a portion of the prompts AI2 used for the challenge. [1]

| Question Prompt |
|---|
| What is the definition of |
| What is the main purpose of |
| What is the main function of a |

They then appended the prompt to the context and had the model produce text. By continuing the prompt, the model would potentially produce a viable question related to the context. Below is an example of this process in code. We'll use top-p sampling with a p value of 0.2 and generate at most 6 tokens, as suggested in the paper by AI2.

question_generation_settings = GENSettings(do_sample=True, top_k=0, top_p=0.2, max_length=6)

context = "The hammer did not fit into the toolbox because it is too big."

question_prompt = "What is the definition of"

# Separate the context and the prompt with a space

text_input = context + " " + question_prompt

q_g_result = happy_gen.generate_text(text_input, args=question_generation_settings)

print(q_g_result.text)

Result: a toolbox?

A:

Now, we can isolate the generated question with the following code.

# Get the location of the question mark if it exists.
# Results in -1 if not present
q_m_location = q_g_result.text.find("?")

full_question = ""
question_ending = ""

if q_m_location != -1:
    question_ending = q_g_result.text[:q_m_location+1]
    full_question = question_prompt + question_ending
    print(full_question)
else:
    print("question not generated. Try a different prompt.")

Result: What is the definition of a toolbox?
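If you plan to try several prompts, the same extraction logic can be wrapped in a small helper. The function name `extract_question` is my own choice for this sketch, and the sample text passed to it is a stand-in for the model's output.

```python
def extract_question(question_prompt, generated_text):
    """Return the full question, or None if the model produced no question mark."""
    q_m_location = generated_text.find("?")
    if q_m_location == -1:
        return None
    # Keep everything up to and including the first question mark.
    question_ending = generated_text[:q_m_location + 1]
    return question_prompt + question_ending


# Stand-in for q_g_result.text from the example above.
sample_generated_text = " a toolbox?\n\nA:"
print(extract_question("What is the definition of", sample_generated_text))
```

Returning None instead of printing a message lets the calling code decide whether to retry with a different prompt.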

Answer Prefix

AI2 manually created an answer prefix for each question prefix to help the model answer the question. Below is a chart that shows the various combinations of question and answer prefixes. [1]

| Question Prompt | Answer Prompt |
|---|---|
| What is the definition of | The definition of _ is |
| What is the main purpose of | The purpose of _ is to |
| What is the main function of a | The main function of a _ is |

Notice how each answer prompt has an underscore to indicate its subject. We can easily extract the subject with the code below, since the subject is simply the ending of the question other than the question mark.

subject = question_ending[:-1]

print(subject)

Result: a toolbox

The prompt can be broken down into three components: the text that precedes the subject, the subject, and the text that follows the subject. So, these components can be combined as shown below.

answer_prefix = " The definition of" + subject + " is"

print(answer_prefix)

Result: The definition of a toolbox is
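To support all three question prompts from the chart, the underscore templates can be stored in a dictionary and filled in programmatically. This is a sketch under my own naming: `ANSWER_TEMPLATES` and `build_answer_prefix` are hypothetical and do not come from AI2's code.

```python
# Question prompt -> answer template, copied from the chart above.
ANSWER_TEMPLATES = {
    "What is the definition of": "The definition of _ is",
    "What is the main purpose of": "The purpose of _ is to",
    "What is the main function of a": "The main function of a _ is",
}


def build_answer_prefix(question_prompt, full_question):
    """Fill the underscore in the matching answer template with the question's subject."""
    # The subject is whatever follows the prompt, minus the question mark.
    subject = full_question[len(question_prompt):].rstrip("?").strip()
    return ANSWER_TEMPLATES[question_prompt].replace("_", subject, 1)


print(build_answer_prefix("What is the definition of",
                          "What is the definition of a toolbox?"))
```

Unlike the concatenation above, this helper strips the leading space from the subject, so remember to add a space before the prefix when you append it to the question.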

Putting Everything Together

We have now learned everything we need to know to generate background information for any arbitrary text. Below is a final example of combining the various components we created to form a final input to the text generation model.

final_input = context + " " + full_question + answer_prefix

print(final_input)

Result: The hammer did not fit into the toolbox because it is too big. What is the definition of a toolbox? The definition of a toolbox is

AI2 recommends using top-p sampling with a p value of 0.5 to generate 10 tokens. Let's define these settings.

answer_generation_settings = GENSettings(do_sample=True, top_k=0, top_p=0.5, max_length=10)

We now have everything we need to generate our final result.

answer_suffix = happy_gen.generate_text(final_input, args=answer_generation_settings).text

print(answer_suffix)

Result: a box for storing and transporting tools. It is

The last step is to combine our prefix and suffix for the answer.

final_result = answer_prefix + answer_suffix

print(final_result)

Result: The definition of a toolbox is a box for storing and transporting tools. It is

That's pretty good!

Note: you may want to apply basic data post-processing to extract only the first sentence.
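One simple way to do this post-processing is sketched below. The `first_sentence` helper is hypothetical and deliberately naive: it cuts at the first period, exclamation mark, or question mark, so abbreviations such as "e.g." will be cut short.

```python
def first_sentence(text):
    """Keep the text up to and including the first sentence-ending mark."""
    for index, char in enumerate(text):
        if char in ".!?":
            return text[:index + 1].strip()
    return text.strip()  # no sentence ending found, so keep everything


# Stand-in for final_result from the example above.
sample_result = " The definition of a toolbox is a box for storing and transporting tools. It is"
print(first_sentence(sample_result))
```

For anything beyond quick experiments, a proper sentence tokenizer would likely be more reliable.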

Differences

The main difference between the method outlined in this article and the method outlined by AI2 is the amount of background information that is generated per context. For each context, they used at least 5 question prefixes. Then, for each question prefix, they generated 5 questions. And finally, for each question, they generated 10 answers. So that means, for each context, they generated at the very least 250 answers – that's a lot of background information!
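The nested structure behind that number can be sketched with stand-in generators. Everything here is hypothetical: `self_talk`, `fake_questions` and `fake_answers` only mimic the counts described above so the sketch runs without a model, and in practice the two generator functions would call the text generation model.

```python
def self_talk(context, question_prefixes, generate_questions, generate_answers):
    """Collect (question, answer) pairs for one context."""
    pairs = []
    for prefix in question_prefixes:
        for question in generate_questions(context, prefix):
            for answer in generate_answers(context, question):
                pairs.append((question, answer))
    return pairs


# Stand-in generators that return placeholder text instead of calling a model.
def fake_questions(context, prefix):
    return [f"{prefix} thing {i}?" for i in range(5)]


def fake_answers(context, question):
    return [f"answer {i}" for i in range(10)]


prefixes = [f"Question prefix {i}" for i in range(5)]
pairs = self_talk("some context", prefixes, fake_questions, fake_answers)
print(len(pairs))  # 5 prefixes * 5 questions * 10 answers
```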

Conclusion

And that's it! You just learned how to generate background information using a Transformer model. I believe this technology can be further improved and applied to other tasks. For example, perhaps fine-tuning could be applied to help improve the model's performance when it comes to generating questions and answers. In addition, I recently published an article that outlines a research idea that you are free to pursue related to self-talk. I'm looking forward to reading about how NLP researchers improve and apply self-talk!

Resources


Code used in this tutorial

References

[1] V. Shwartz, P. West, R. Le Bras, C. Bhagavatula, and Y. Choi, Unsupervised Commonsense Question Answering with Self-Talk (2020), EMNLP 2020

Attributions

Ted Brownlow, Will Macdonald and Ryley Wells were part of my capstone project team.
