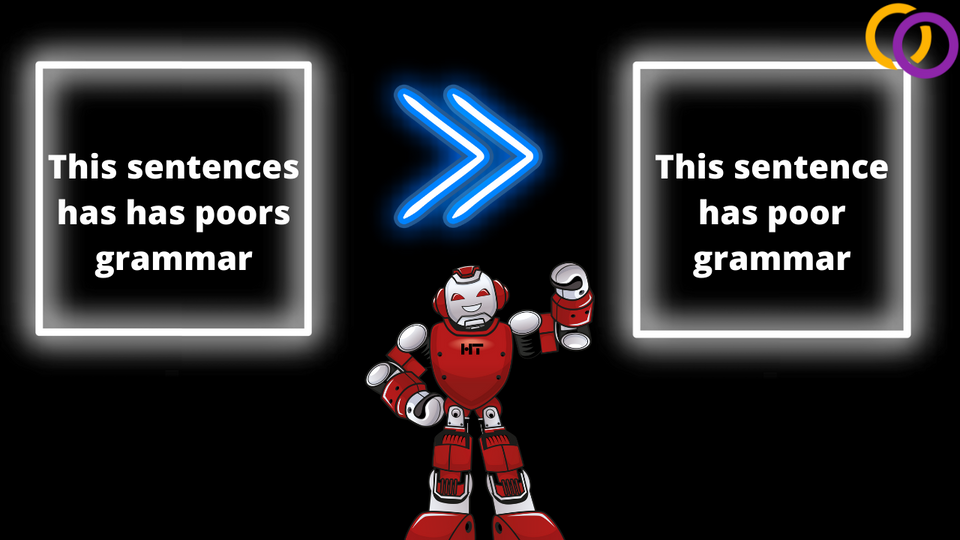

Grammar Correction With Transformer Models Made Easy

Grammar correction has many useful applications. Perhaps you wish to improve the quality of your data before fine-tuning a model. Or maybe you want to provide grammar suggestions for text that a user submits. In either case, you can consider using a model I recently published that produces a grammatically correct version of the text you give it. You don't need any prior experience in AI or natural language processing (NLP) to follow this tutorial. All you need is a basic understanding of Python, so let's get right to it!

We'll use a fine-tuned version of Google's T5 model. T5 is a text-to-text model: given input text, it produces a standalone piece of output text. It is currently considered "state-of-the-art," and the largest model even outperforms the human baseline on the General Language Understanding Evaluation (GLUE) benchmark. The T5 model we'll use was fine-tuned on the JHU FLuency-Extended GUG corpus (JFLEG), a well-known grammar correction dataset within the NLP community.

The model is available on Hugging Face's model hub and can be used with just a few lines of code through Happy Transformer, a Python package of which I am the lead maintainer. You can learn how to fine-tune your own grammar correction model within this article, and I encourage you to publish your own grammar correction models to Hugging Face's model hub to help advance the NLP community.

Installation

Happy Transformer is available on PyPI and thus can be installed with a simple pip command.

pip install happytransformer

Model

As mentioned, T5 is a "text-to-text" model. Thus, we'll import HappyTextToText, the Happy Transformer class for working with text-to-text models.

from happytransformer import HappyTextToText

We can now download and initialize the model from Hugging Face’s model hub with the following line of code. Notice how we pass the model type in all caps as the first positional argument and the model name as the second.

happy_tt = HappyTextToText("T5", "vennify/t5-base-grammar-correction")

Generate

Let's initialize settings to generate text. We'll import a class called TTSettings and instantiate an object that contains the proper settings to use a generation algorithm called "beam search." You can learn more about the different text generation settings on this webpage.

from happytransformer import TTSettings

beam_settings = TTSettings(num_beams=5, min_length=1, max_length=100)
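
For intuition about what num_beams controls, here is a toy sketch of beam search in plain Python. It is not how Happy Transformer or Hugging Face implement generation: the probability table and the function names are made up for illustration, and real implementations also handle details such as end-of-sequence tokens that this sketch omits.

```python
import math

# Hypothetical toy "model": maps the tokens generated so far to the
# probabilities of each possible next token. An empty dict means "stop".
TOY_NEXT_TOKEN_PROBS = {
    (): {"I": 0.6, "We": 0.4},
    ("I",): {"want": 0.5, "wants": 0.5},
    ("We",): {"want": 0.9, "wants": 0.1},
}


def next_token_probs(tokens):
    """Look up next-token probabilities for a tuple of tokens."""
    return TOY_NEXT_TOKEN_PROBS.get(tokens, {})


def beam_search(num_beams, max_length):
    """Return the highest-scoring token sequence found with `num_beams` beams."""
    beams = [((), 0.0)]  # each beam is (tokens, summed log-probability)
    for _ in range(max_length):
        candidates = []
        for tokens, score in beams:
            options = next_token_probs(tokens)
            if not options:
                # Nothing left to generate: carry the finished beam forward.
                candidates.append((tokens, score))
                continue
            for token, prob in options.items():
                candidates.append((tokens + (token,), score + math.log(prob)))
        # Keep only the `num_beams` most probable partial sequences.
        candidates.sort(key=lambda item: item[1], reverse=True)
        beams = candidates[:num_beams]
    return beams[0][0]


greedy = beam_search(num_beams=1, max_length=2)  # keeps one candidate per step
wider = beam_search(num_beams=2, max_length=2)   # keeps two candidates per step
print(" ".join(greedy), "|", " ".join(wider))
```

With a single beam, the search commits to "I" because 0.6 beats 0.4, and the best it can then reach has a total probability of 0.30. With two beams, "We" stays in play long enough for "We want" (0.4 × 0.9 = 0.36) to win. Keeping more beams trades extra computation for a better chance of finding a high-probability output.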

Now, let’s create text that contains multiple grammatical mistakes so that we can correct it using the model. We must add the prefix “grammar: ” to the text to indicate the task we want to perform.

Example 1

input_text_1 = "grammar: I I wants to codes."

From here, we can simply call happy_tt's generate_text method and provide both the text and the settings.

output_text_1 = happy_tt.generate_text(input_text_1, args=beam_settings)

print(output_text_1.text)

Result: I want to code.
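
If you want to show users what changed rather than just the corrected sentence, one option is a word-level diff. The sketch below uses Python's standard difflib module. The helper name word_changes is made up for illustration, and the two strings are the input and result from Example 1 (without the "grammar: " prefix), so it runs without downloading the model.

```python
import difflib


def word_changes(original, corrected):
    """List (operation, original words, corrected words) for each edit."""
    original_words = original.split()
    corrected_words = corrected.split()
    matcher = difflib.SequenceMatcher(
        a=original_words, b=corrected_words, autojunk=False
    )
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue  # unchanged words are not suggestions
        changes.append(
            (tag, " ".join(original_words[i1:i2]), " ".join(corrected_words[j1:j2]))
        )
    return changes


for operation, before, after in word_changes("I I wants to codes.", "I want to code."):
    print(f"{operation}: {before!r} -> {after!r}")
```

Splitting on whitespace keeps punctuation attached to words, which is good enough for a quick suggestion list. A production tool would likely want a proper tokenizer.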

Example 2

input_text_2 = "grammar: You're laptops is broken "

output_text_2 = happy_tt.generate_text(input_text_2, args=beam_settings)

print(output_text_2.text)

Result: Your laptop is broken.
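
Both examples repeat the same two steps: add the "grammar: " prefix, then call generate_text. A small helper can bundle them. This is only a sketch: correct_grammar and GRAMMAR_PREFIX are names invented here, not part of Happy Transformer, and the helper assumes the model object exposes generate_text(text, args=...) and returns an object with a .text attribute, as happy_tt does above.

```python
GRAMMAR_PREFIX = "grammar: "  # task prefix this model expects


def correct_grammar(model, text, settings):
    """Add the task prefix to `text` and return the model's corrected text."""
    # Assumption: stripping surrounding whitespace is harmless for this task.
    prompt = GRAMMAR_PREFIX + text.strip()
    result = model.generate_text(prompt, args=settings)
    return result.text


# Usage with the objects created earlier in this tutorial:
# print(correct_grammar(happy_tt, "You're laptops is broken", beam_settings))
```

Passing the model and settings in as arguments keeps the helper easy to reuse with a different checkpoint or different generation settings.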

Conclusion

You just learned how to use a state-of-the-art AI model to perform grammar correction. Here's a Colab file that contains all of the code covered in this tutorial. I suggest you play around with the Colab file and provide the model with your own inputs. Also, to enhance your learning, I suggest you fine-tune your own grammar correction model, which you can learn how to do within this article. Training your own grammar model is quite easy because Happy Transformer abstracts away the complexities that are typically involved in fine-tuning Transformer models. I hope you learned something useful. Stay happy, everyone!

Resources:

Interested in networking with fellow NLP enthusiasts or have a question? Then join Happy Transformer's Discord community.

Support Happy Transformer by giving it a star 🌟🌟🌟